Software 3.0 Is Here: Reflections on Andrej Karpathy's 'Software Is Changing (Again)'

- Author: Huan Zhang (@zhkrob)

Recently I came across an inspiring talk by Andrej Karpathy titled "Software Is Changing (Again)" on the Y Combinator YouTube channel. The talk got me thinking deeply about how the essence of programming and software development is rapidly evolving under the influence of large language models (LLMs). Here are a few key insights and reflections inspired by Karpathy's presentation.

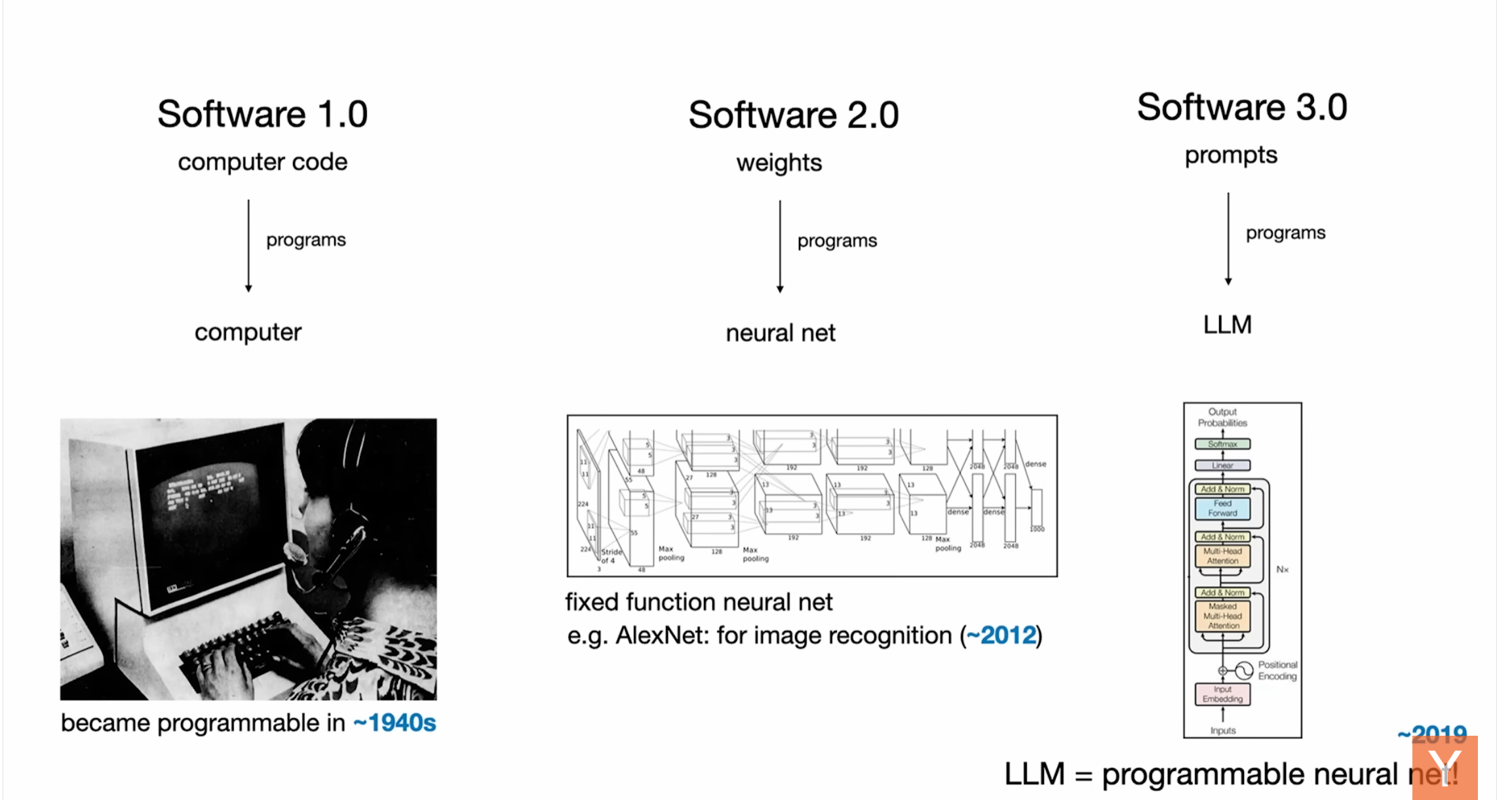

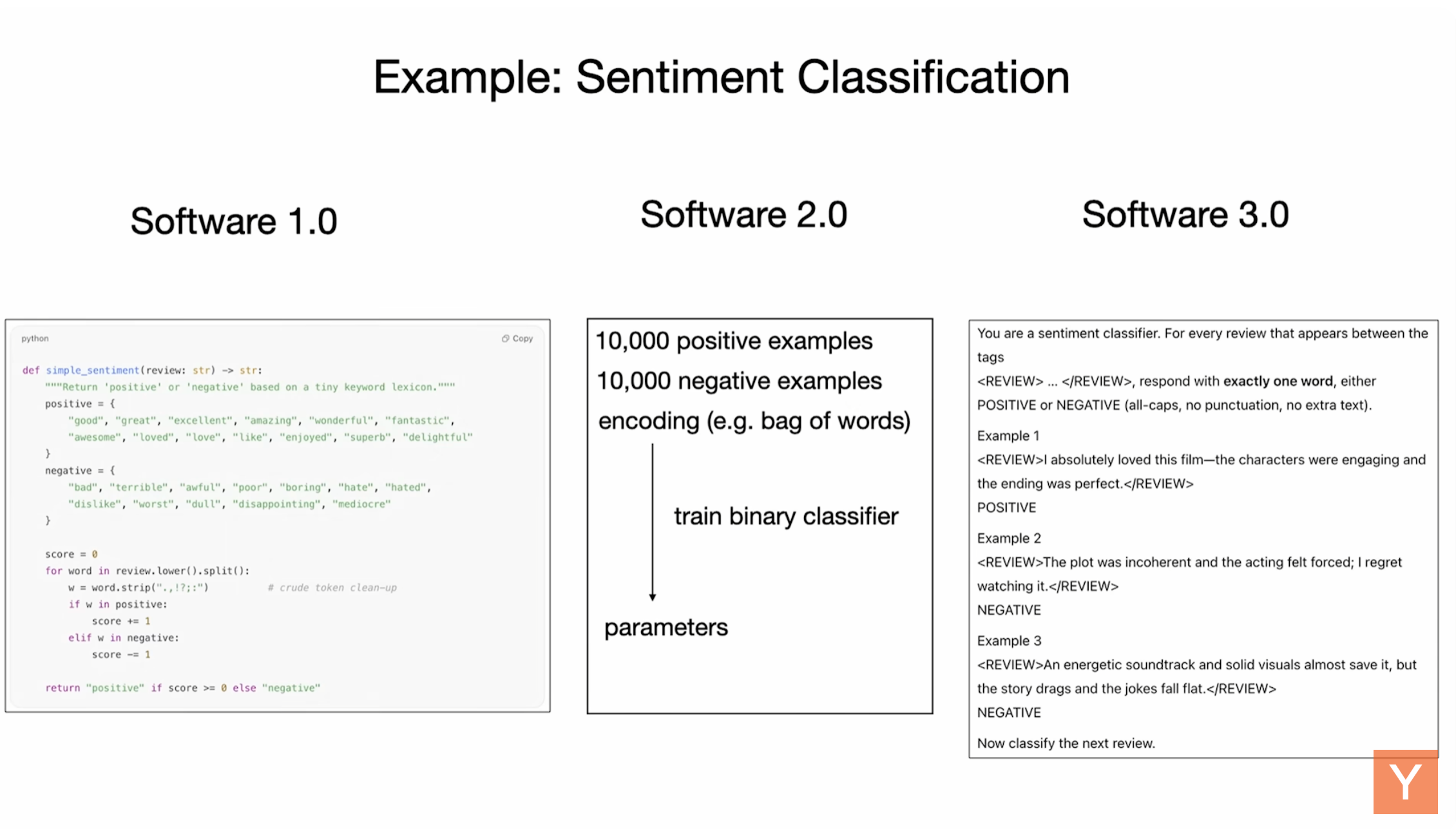

Three Paradigms of Software

Historically, software has been relatively static—mostly hand-crafted by developers using explicit programming languages like Python, Java, or JavaScript. Karpathy labels this traditional mode as Software 1.0, a world of human-written code instructing machines directly.

Then came Software 2.0, powered by deep learning. Instead of manually coding logic, developers became data curators who trained neural networks. The model weights themselves represented the software. This paradigm shift allowed software to solve tasks like image recognition and language understanding that traditional methods struggled with.

Now we stand at the threshold of Software 3.0. Here, we program software in our own natural language through prompts (plain English instructions), interacting directly with powerful large language models. This radically shortens the distance between an idea and its implementation, effectively opening programming up to anyone who can express their intent clearly. I can already feel this shift toward Software 3.0 in my own daily work.

Source: Software Is Changing (Again)
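To make the contrast between Software 1.0 and Software 3.0 concrete, here is a minimal sketch of the same small task, sentiment classification, written both ways. The task, the keyword lists, and the prompt wording are illustrative choices of mine. The `complete` callable is a hypothetical stand-in for whatever LLM client you use, and `fake_complete` returns a canned reply so the sketch runs without a real model.

```python
from typing import Callable

# Software 1.0: the logic is spelled out by hand in code.
# These keyword sets are illustrative, not a serious lexicon.
POSITIVE_WORDS = {"great", "love", "excellent", "good"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful"}


def classify_v1(review: str) -> str:
    """Count hand-picked keywords and compare the totals."""
    words = [w.strip(".,!?").lower() for w in review.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt handed to a model.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following review as positive, "
    "negative, or neutral. Answer with a single word.\n\n"
    "Review: {review}"
)


def classify_v3(review: str, complete: Callable[[str], str]) -> str:
    """Build the prompt and delegate the decision to an LLM.

    `complete` is a hypothetical callable: prompt text in, reply text out.
    """
    reply = complete(PROMPT_TEMPLATE.format(review=review))
    return reply.strip().lower()


def fake_complete(prompt: str) -> str:
    """Canned reply so the sketch runs without a real model (assumption)."""
    return "Positive\n"


if __name__ == "__main__":
    review = "I love this phone, the camera is great!"
    print(classify_v1(review))
    print(classify_v3(review, fake_complete))
```

In the first version, changing the behavior means editing code. In the second, it means editing the prompt text, which is the sense in which a prompt acts as a program.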

LLMs as Operating Systems

One particularly fascinating analogy Karpathy offered was comparing LLMs to early operating systems. Just as operating systems abstracted complex hardware interactions behind simple, intuitive commands, LLMs abstract away complexity by letting us interact through plain language.

But like early computers, today's LLMs remain centralized and costly. The situation is reminiscent of 1960s computing, when expensive mainframes were accessed remotely. Personal, local LLM computing is still on the horizon, but early experiments suggest it may soon become feasible.

The Power (and Risk) of Partial Autonomy

Another intriguing concept Karpathy shared is the rise of partially autonomous software—tools that automate parts of our workflow while keeping humans actively involved. Good examples today include apps like Cursor for coding or Perplexity for research. They balance autonomy with human oversight through intuitive interfaces, allowing users to adjust how much control they hand over to the AI.

This idea resonates deeply because it highlights a critical truth: fully autonomous agents still aren't reliable enough to trust blindly. The sweet spot is a collaborative balance, with humans guiding, verifying, and curating while AI amplifies productivity. I think this holds true today, though it may well change in the future.
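One way to picture adjustable autonomy is as a setting that decides which AI-proposed changes are applied automatically and which wait for a human. The sketch below is my own illustration, not how Cursor or Perplexity actually work: the `Autonomy` levels, the `ProposedChange` type, and the 10-line threshold are all assumptions.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, List


class Autonomy(IntEnum):
    """Hypothetical slider positions, from least to most autonomous."""
    SUGGEST = 0      # AI proposes; a human approves every change
    SMALL_EDITS = 1  # AI applies small changes; large ones need approval
    FULL = 2         # AI applies everything without review


@dataclass
class ProposedChange:
    description: str
    lines_changed: int


def needs_human_review(change: ProposedChange, level: Autonomy,
                       small_limit: int = 10) -> bool:
    """Decide whether a change must be shown to a human first."""
    if level == Autonomy.FULL:
        return False
    if level == Autonomy.SMALL_EDITS:
        return change.lines_changed > small_limit
    return True


def apply_changes(changes: List[ProposedChange], level: Autonomy,
                  approve: Callable[[ProposedChange], bool]) -> List[str]:
    """Return descriptions of the changes that end up applied.

    `approve` stands in for the human reviewer and is only consulted
    when the current autonomy level requires a review.
    """
    applied = []
    for change in changes:
        if not needs_human_review(change, level) or approve(change):
            applied.append(change.description)
    return applied


if __name__ == "__main__":
    changes = [
        ProposedChange("rename variable", 2),
        ProposedChange("rewrite auth module", 240),
    ]

    def cautious_reviewer(change: ProposedChange) -> bool:
        # This reviewer rejects anything it is asked about.
        return False

    for level in Autonomy:
        print(level.name, apply_changes(changes, level, cautious_reviewer))
```

Sliding from `SUGGEST` toward `FULL` shrinks the set of changes a human has to look at, which is the trade-off between speed and oversight that the paragraph above describes.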

Thinking About LLM Psychology

Karpathy described LLMs humorously but insightfully as "people spirits," complete with human-like flaws—excellent memory but prone to "hallucinations," brilliant yet easily misled, powerful yet forgetful. Understanding these traits helps us craft more effective interactions. We need to build systems not just to exploit AI strengths, but to carefully mitigate their weaknesses.

Adapting Infrastructure for AI

A particularly practical takeaway is that much of our current digital infrastructure—documentation, APIs, websites—was never designed with AI agents in mind. Karpathy suggested simple but transformative ideas:

- Offering docs in structured Markdown instead of dense HTML.

- Replacing click-heavy instructions with direct API calls.

- Providing simple metadata files (e.g., `llm.txt`) to help AI agents navigate digital content easily.

Small tweaks like these can significantly improve the AI's ability to interact reliably with existing systems.
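As a rough illustration of the metadata-file idea in the list above, the sketch below renders a small Markdown index that an agent could read instead of crawling HTML. The layout (a title, a one-line summary, then a list of links) is my assumption for illustration rather than a fixed standard, and the `DocPage` type, the site name, and the example.com URLs are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DocPage:
    title: str
    url: str
    summary: str


def render_index(site_name: str, tagline: str, pages: List[DocPage]) -> str:
    """Render a plain-Markdown index of documentation pages.

    The structure is an assumed layout: a heading, a short quoted
    summary, and one bullet per page with a link and a description.
    """
    lines = [f"# {site_name}", "", f"> {tagline}", "", "## Docs", ""]
    for page in pages:
        lines.append(f"- [{page.title}]({page.url}): {page.summary}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    pages = [
        DocPage("Quickstart", "https://example.com/docs/quickstart.md",
                "Install the client and make a first request."),
        DocPage("API reference", "https://example.com/docs/api.md",
                "Endpoints, parameters, and error codes."),
    ]
    print(render_index("Example API", "Docs written for humans and agents.", pages))
```

The point of a file like this is that each link leads to Markdown an agent can parse directly, so it never has to work out which button a tutorial wants clicked.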

What This Means for the Future

Reflecting on Karpathy's insights, I believe we're entering a fascinating era of software development, marked by collaboration between humans and machines. Just as graphical interfaces once revolutionized human-computer interaction, natural-language prompting could fundamentally reshape software development, making it more accessible and intuitive.

Yet, we should temper our excitement with caution. Powerful as these models are, they require thoughtful design and vigilant human oversight. Partial autonomy—not total automation—is likely the safest, most productive near-term strategy.

As Karpathy cleverly put it, we're not yet at fully autonomous Iron Man suits. Rather, we're carefully building and adjusting partial autonomy sliders. Perhaps in the future, writing prompts will be as commonplace as writing code is today.